By Sarah Yang

Delivery services may be able to overcome snow, rain, heat and the gloom of night, but a new class of legged robots is not far behind. Artificial intelligence algorithms developed by a team of researchers from UC Berkeley, Facebook and Carnegie Mellon University are equipping legged robots with an enhanced ability to adapt to and navigate unfamiliar terrain in real time.

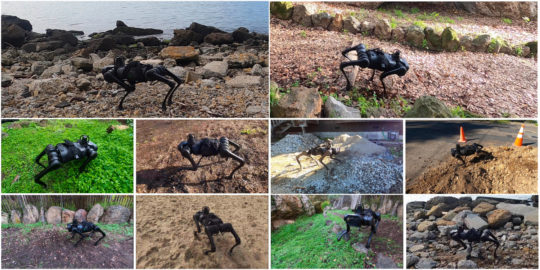

Their test robot successfully traversed sand, mud, hiking trails, tall grass and dirt piles without falling. It also outperformed alternative systems in adapting to a weighted backpack thrown onto its top or to slippery, oily slopes. When walking down steps and scrambling over piles of cement and pebbles, it achieved 70% and 80% success rates, respectively, still an impressive feat given the lack of simulation calibrations or prior experience with the unstable environments.

Rapid Motor Adaptation-enabled test robot traverses various types of terrain. (Images courtesy Berkeley AI Research, Facebook AI Research and Carnegie Mellon University)

Not only could the robot adjust to novel circumstances, but it could also do so in fractions of a second rather than in minutes or more. That speed is critical for practical deployment in the real world.

The research team presented the new AI system, called Rapid Motor Adaptation (RMA), at the 2021 Robotics: Science and Systems (RSS) Conference.

“Our insight is that change is ubiquitous, so from day one, the RMA policy assumes that the environment will be new,” said study principal investigator Jitendra Malik, a professor at UC Berkeley’s Department of Electrical Engineering and Computer Sciences and a research scientist at the Facebook AI Research (FAIR) group. “It’s not an afterthought, but a forethought. That’s our secret sauce.”

Previously, legged robots were typically preprogrammed for the likely environmental conditions they would encounter or taught through a mix of computer simulations and hand-coded policies dictating their actions. This could take millions of trials — and errors — and still fall short of what the robot might face in reality.

“Computer simulations are unlikely to capture everything,” said lead author Ashish Kumar, a UC Berkeley Ph.D. student in Malik’s lab. “Our RMA-enabled robot shows strong adaptation performance to previously unseen environments and learns this adaptation entirely by interacting with its surroundings and learning from experience. That is new.”

The RMA system combines a base policy — the algorithm by which the robot determines how to move — with an adaptation module. The base policy uses reinforcement learning to develop controls for sets of extrinsic variables in the environment. This is learned in simulation, but that alone is not enough to prepare the legged robot for the real world because the robot’s onboard sensors cannot directly measure all possible variables in the environment. To solve this, the adaptation module directs the robot to teach itself about its surroundings using information based on its own body movements. For example, if a robot senses that its feet are extending farther, it may surmise that the surface it is on is soft and will adapt its next movements accordingly.
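The division of labor described above can be illustrated with a toy sketch. This is not the researchers' implementation: the class names, the dimensions, the history length and the random linear maps standing in for trained networks are all assumptions made for illustration. It only shows the data flow, in which an adaptation module turns a short history of the robot's own states and actions into an estimate of the environment, and a base policy uses that estimate alongside the current state to choose the next action.

```python
from collections import deque
import random

# All sizes below are hypothetical, chosen only to keep the example small.
STATE_DIM = 4      # size of one reading from the robot's own sensors
ACTION_DIM = 2     # number of joint targets produced per step
LATENT_DIM = 3     # size of the estimated "extrinsics" vector
HISTORY_LEN = 5    # recent steps visible to the adaptation module


def make_matrix(rows, cols, rng):
    """Random weights standing in for a trained network (assumption)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


class AdaptationModule:
    """Estimates environment variables from recent states and actions."""

    def __init__(self, rng):
        step_dim = STATE_DIM + ACTION_DIM
        self.weights = make_matrix(LATENT_DIM, HISTORY_LEN * step_dim, rng)
        # Start with an all-zero history so estimate() works from step one.
        self.history = deque([[0.0] * step_dim] * HISTORY_LEN, maxlen=HISTORY_LEN)

    def observe(self, state, action):
        self.history.append(list(state) + list(action))

    def estimate(self):
        flat = [x for step in self.history for x in step]
        return matvec(self.weights, flat)


class BasePolicy:
    """Maps the current state plus the estimated extrinsics to an action."""

    def __init__(self, rng):
        self.weights = make_matrix(ACTION_DIM, STATE_DIM + LATENT_DIM, rng)

    def act(self, state, latent):
        return matvec(self.weights, list(state) + list(latent))


def run_episode(steps=10, seed=0):
    rng = random.Random(seed)
    adapt, policy = AdaptationModule(rng), BasePolicy(rng)
    state = [0.0] * STATE_DIM
    actions = []
    for _ in range(steps):
        latent = adapt.estimate()           # guess about the surroundings
        action = policy.act(state, latent)  # move according to that guess
        adapt.observe(state, action)        # remember what was just done
        # Toy stand-in for new sensor readings; a real robot would measure these.
        state = [rng.uniform(-1.0, 1.0) for _ in range(STATE_DIM)]
        actions.append(action)
    return actions
```

Calling `run_episode()` returns one action per step. In a real system both components would be learned rather than random, and the state would come from the robot's sensors rather than a random number generator.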

The base policy and adaptation module are run asynchronously and at different frequencies, which allows RMA to operate robustly with only a small onboard computer.
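One way to picture two components running asynchronously at different rates is with a background thread and a shared value, as in the sketch below. Everything in it is an assumption for illustration: the article does not give the actual rates or say which component runs faster, so the periods, the choice to make the policy loop the faster one, and the names `SharedLatent`, `adaptation_loop` and `policy_loop` are hypothetical. The point is only that the fast loop never waits for the slow one; it reads whatever estimate was written most recently.

```python
import threading
import time


class SharedLatent:
    """Holds the most recent estimate; guarded by a lock for safe sharing."""

    def __init__(self):
        self._lock = threading.Lock()
        self._value = 0.0
        self.updates = 0

    def write(self, value):
        with self._lock:
            self._value = value
            self.updates += 1

    def read(self):
        with self._lock:
            return self._value


def adaptation_loop(shared, stop, period_s):
    """Slow loop: publishes a fresh estimate every period_s seconds."""
    estimate = 0.0
    while not stop.is_set():
        estimate += 1.0        # placeholder for a real estimate
        shared.write(estimate)
        stop.wait(period_s)    # sleeps, but wakes early when asked to stop


def policy_loop(shared, steps, period_s):
    """Fast loop: reads the latest estimate each step without blocking on it."""
    seen = []
    for _ in range(steps):
        seen.append(shared.read())
        time.sleep(period_s)   # placeholder for computing and sending an action
    return seen


def run(steps=50, policy_period_s=0.002, adapt_period_s=0.02):
    # The periods are hypothetical and not taken from the article.
    shared = SharedLatent()
    stop = threading.Event()
    worker = threading.Thread(
        target=adaptation_loop, args=(shared, stop, adapt_period_s)
    )
    worker.start()
    seen = policy_loop(shared, steps, policy_period_s)
    stop.set()
    worker.join()
    return seen, shared.updates
```

With these periods the policy loop typically reuses the same estimate for several consecutive steps, which is the trade-off the design accepts: the slower component does not hold up the faster one.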

Other members of the research team are Deepak Pathak, an assistant professor at CMU’s School of Computer Science, and Zipeng Fu, a master’s student in Pathak’s group.

The RMA project is part of an industry-academic collaboration with the FAIR group and the Berkeley AI Research (BAIR) lab. Before joining the CMU faculty, Pathak was a researcher at FAIR and a visiting researcher at UC Berkeley. Pathak also received his Ph.D. degree in electrical engineering and computer sciences from UC Berkeley.

This article originally appeared in Berkeley Engineering, July 9, 2021, and is re-posted with permission in the UC IT Blog.