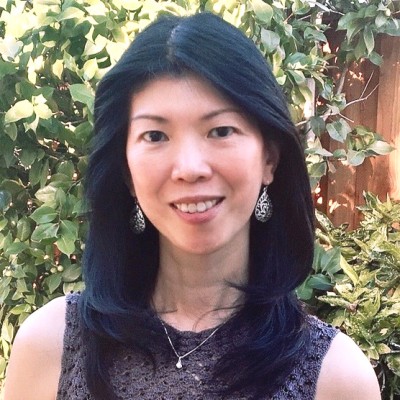

By Brendon Phuong and Laurel Skurko. Anita Ho, an associate professor in the UCSF Bioethics Program, recently spoke with the UC Tech News team about her insights and experience in collecting and using data in the health care setting. She emphasized the importance of earning the trust of those who provide the data. That trust is achievable when the data governance system is structured to meet the needs of the very individuals providing the data. During the conversation, she explored strategies for improving data governance and described her current focus on mental health chatbots, including how AI can be applied responsibly to address challenges in mental health care delivery.

Listen to Anita Ho describe her work on YouTube.

Ethics and data privacy in healthcare and academic settings

When considering ethics and data privacy, we often focus on obtaining consent for data collection and use. Academic health centers place particular emphasis on data collection and consent because we operate within a learning health system: we need to gather and use data to improve patient care, and patients need to trust that we are using their data for learning and improvement. In the academic context, ethics and data privacy should therefore be approached deliberately to ensure that data is collected and used responsibly. There is an opportunity to build trust and encourage data sharing, provided we clearly communicate to patients why the data is being collected and how the institution intends to use it in the future.

What “big data” means when it comes to patient consent

In today’s era of big data, data can be collected for one purpose but later used for another. Big data allows us to combine datasets for a broader perspective, particularly in precision medicine, where diverse data streams can be invaluable. It is therefore crucial to establish and communicate the parameters for how the data will be used. This requires trust not only in individual researchers but also in the entire governance system responsible for managing and authorizing appropriate use of the data. Trust in the governance structure allows data to extend beyond a single project and have a larger impact on society.

Why it might be counterintuitive to ask patients too many questions when collecting data

Our team recently completed a project on cluster trial ethics and data use, focusing on health service delivery. In these trials, we often worked with data that was not individually identifiable. This raised the question of whether we should still seek consent from individuals who might become part of the dataset. One of our patient advisors suggested that excessive requests for consent could make people apprehensive: when patients were repeatedly asked for permission and presented with intricate documents, they began to suspect hidden motives. In other words, frequent permission requests and complex documents can sometimes fail to convey the purpose of data collection.

How to improve marketing messaging in healthcare

A few months ago, I attended a talk on the “10,000 steps” concept, which aims to encourage physical activity and includes a tracking device to monitor progress. Although the talk covered important strategies, many of them did not consider the broader social environment. For individuals living in areas with high levels of gun violence, limited sidewalks, and no parks, reaching 10,000 steps was not feasible. Even if these individuals were offered a tracking device to motivate continual improvement, environmental barriers would discourage participation and progress. This raises the question of how we can promote healthy activities while addressing the social obstacles some people face. It also points to the importance of not stigmatizing individuals who cannot participate for reasons outside their control.

Examples like this highlight that, although health promotion campaigns attempt to inspire individuals to adopt certain behaviors, they may fail for two reasons: (1) social determinants of health (e.g., a dangerous walking environment) may hinder one’s ability to participate; and (2) people may feel penalized for not meeting activity targets (e.g., a daily step count) when they are being monitored.

Mental health chatbots – ethical analyses to ensure value

I now want to focus more on mental health products, particularly chatbots. I am interested in conducting ethical analyses to better understand the role of these technologies in addressing mental health issues. I am not against using these products, but I aim to explore what technologists can do to ensure these services actually help individuals with mental health concerns.

Behavioral and mental health issues often carry significant stigma, leading many people to avoid seeking in-person care. Chatbots can address this concern because they offer accessible, anonymous support at any time. However, there are concerns that these technologies may inadvertently reinforce problematic behaviors, such as addiction and boundary crossing.

My interest lies in developing these applications, especially with the use of generative AI, in a way that instills confidence and serves the right purpose. These technologies must prioritize the well-being of individuals over engagement, since excessive (or obsessive) engagement can contribute to the very issues we are trying to address in the first place.

Related reading

Live Like Nobody Is Watching: Relational Autonomy in the Age of Artificial Intelligence Health Monitoring

Oxford University Press (by Anita Ho)

May 2, 2023

Contact

Anita Ho, PhD, MPH

Associate Professor

UCSF Bioethics Program

Author

Brendon Phuong

Marketing and Communications Intern

UC Office of the President

Laurel Skurko

Marketing & Communications Director

UC Office of the President